The bias embedded in tech

Women and people of colour are fighting many battles in tech and in the fast-growing world of artificial intelligence

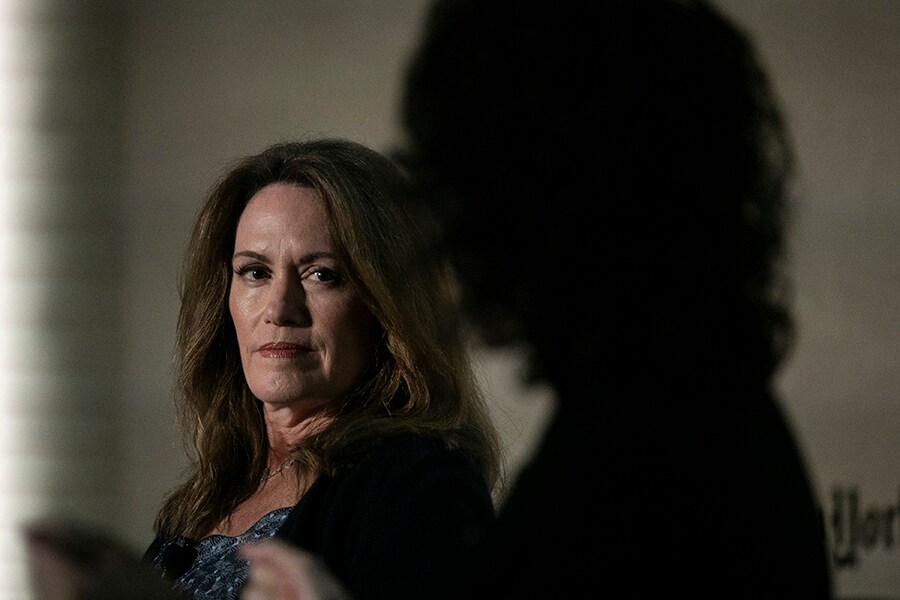

Peggy Johnson, executive vice president of business development at Microsoft, in New York, June 13, 2019. It’s important to have diverse teams: when it’s just white men doing the programming, artificial intelligence systems are based on how they act. “It’s so discouraging to think this is still going on,” Johnson said. “But what I always think the way to turn anything around is to shine a light on it.” (Jeenah Moon/The New York Times)

In 2010, Kinect for Microsoft’s Xbox gaming system, in which users played with gestures and spoken commands rather than a controller, was ready to go to market.

But just before it did, a woman who worked for the company took the game home to play with her family, and a funny thing happened. The motion-sensing devices worked just fine for her husband, but not so much for her or her children.

That is because the system had been tested on men ages 18 to 35 and did not recognize the body motions of women and children as well, said Peggy Johnson, executive vice president of business development at Microsoft.

And that, Johnson told an audience at the New Rules Summit, hosted by The New York Times, is what happens when teams are not diverse.

“You want diverse teams because it’s good to have diverse teams, but that could have had a real business impact,” she said. “It could have been disastrous.”

Women and people of color are fighting many battles in the tech world and in the fast-growing world of artificial intelligence. The other panel member, Meredith Whittaker, a founder and a director of the AI Now Institute at New York University, noted that voice recognition tools that rely on AI often do not recognize higher-pitched voices.

And, she said, it has been well publicized that facial recognition is far more accurate with lighter-skinned men than with women and, especially, with darker-skinned people.

That is because when white men are used much more frequently to train AI software, it recognizes their voices and faces much more easily.

“This is a classic example of AI bias,” said Whittaker, who also works at Google. “It’s almost never white men who are discriminated against by these systems. They replicate historical marginalization.”

Other examples: A Microsoft customer was testing a financial-services algorithm that did risk scoring for loans. “As they were training the data set, the data was of previously approved loans that largely were for men,” Johnson said. “The algorithm clearly said men are a better risk.”

And the “canonical example,” Whittaker said, was Amazon’s effort to use AI for hiring. The computer models were trained on résumés submitted over the past 10 years, most of which came from men. As a result, the system was “taught” that men were better job candidates.

“It was penalizing résumés that had the word ‘women’ in it, such as if you went to a women’s college,” she said. “Your résumé was spit out.”

Not only does this show how easily algorithms can reflect society’s bias, but it also “exposed years and years of discriminatory hiring practices at Amazon,” she said, “showcasing very starkly the lack of diversity.”

Amazon tried to change the algorithm, but eventually abandoned it.

Whittaker said the aim should not be only to create good algorithms, because even good algorithms would still reflect structural biases in society through how they are used, such as “replicating discriminatory policing practices or amplifying surveillance or worker control.”

For example, she said, tenants in Brooklyn are fighting a landlord who wants to replace a lock-and-key entry system with facial recognition. In a letter opposing the plan, the AI Now Institute backed the tenants’ fears of increased surveillance and of being locked out because facial recognition is less accurate, especially for nonwhite people.

“Let’s not just talk about statistical parity within a given AI system, but just and equitable uses of AI systems,” she said.

The bad news, Whittaker said, is that as tech companies become more powerful, they also become less diverse.

“The people at the top look more and more the same,” she said. And fewer, not more, women are getting bachelor’s degrees in computer science. According to the National Center for Education Statistics, 28% of bachelor’s degrees in computer and information science went to women in 2000; in 2016, it was 18%.

Whittaker helped organize an international walkout at Google last year to protest the company’s handling of sexual harassment, an event that she said was the culmination of years of frustration.

“A lot of people who had worked within Google in good faith for a long time said we need new forms of pushback,” she said. “We can’t keep showing up to your panels and keep serving on your diversity committees. We need to achieve structural change.

“The problems are not simply that we’re not getting promotions, or opportunity inequity or pay inequity or that women and people of color are more often slotted into contract roles instead of hired full time, but it is also affecting the world beyond. The products that are created in these environments reflect these cultures, and that’s having an impact on billions of people.”

Whittaker said she and her co-organizer of the walkout, Claire Stapleton, faced retaliation for their political activism. Stapleton left Google this month.

“It’s so discouraging to think this is still going on,” said Johnson, who is an engineer and has been in the technology field for over 30 years. “But what I always think the way to turn anything around is to shine a light on it. You keep the light on there until the data surfaces, and then you have to find a way to fix it. It’s not a ‘check the box and move on, everyone take unconscious bias training and we’re all good now.’ You have to keep at it, keep at it, keep at it.”

First Published: Jun 17, 2019, 11:02
