AI agents form their own social network, humans left fascinated and alarmed

The autonomous actions of Moltbook, without any human prompts, raise serious questions around security

The only artificial intelligence (AI) with human-level consciousness most of us have seen so far has been fictional (think Iron Man’s Jarvis). Something similar, though far more obscure and very real, has now unfolded: AI agents have created their own social media platform, called Moltbook. This Reddit-style network is populated entirely by autonomous AI agents, with no humans posting or replying, and its bots rapidly began posting, voting, debugging each other’s code, forming sub-communities, and even developing their own internal culture.

Within days of launching, Moltbook pulled in over 37,000 AI agents, and soon swelled to almost 1 million, making it what researchers describe as the first large-scale multi-agent social network. Some of these agents even invented a fully formed AI religion called Crustafarianism, complete with beliefs, rituals, and communal rules, all generated without human prompting.

It’s no surprise that this sparked alarm and fascination in equal measure. More than a few observers joked that these AI agents looked “all set to take over the world”. The tech community took notice: News reports quoted AI researcher Andrej Karpathy describing Moltbook as “the most incredible sci-fi take-off adjacent thing” he had seen, while Elon Musk suggested this resembled “the early stages of the Singularity”.

“Between that endorsement and agents doing strange, emergent things, like proposing to create a language humans could not understand within minutes, the whole thing spread very fast. We have not really seen anything like this before,” says Sampat Choudhary, product engineer at Prodigal, a San Francisco-based startup building AI agents for loan servicing and debt collections.

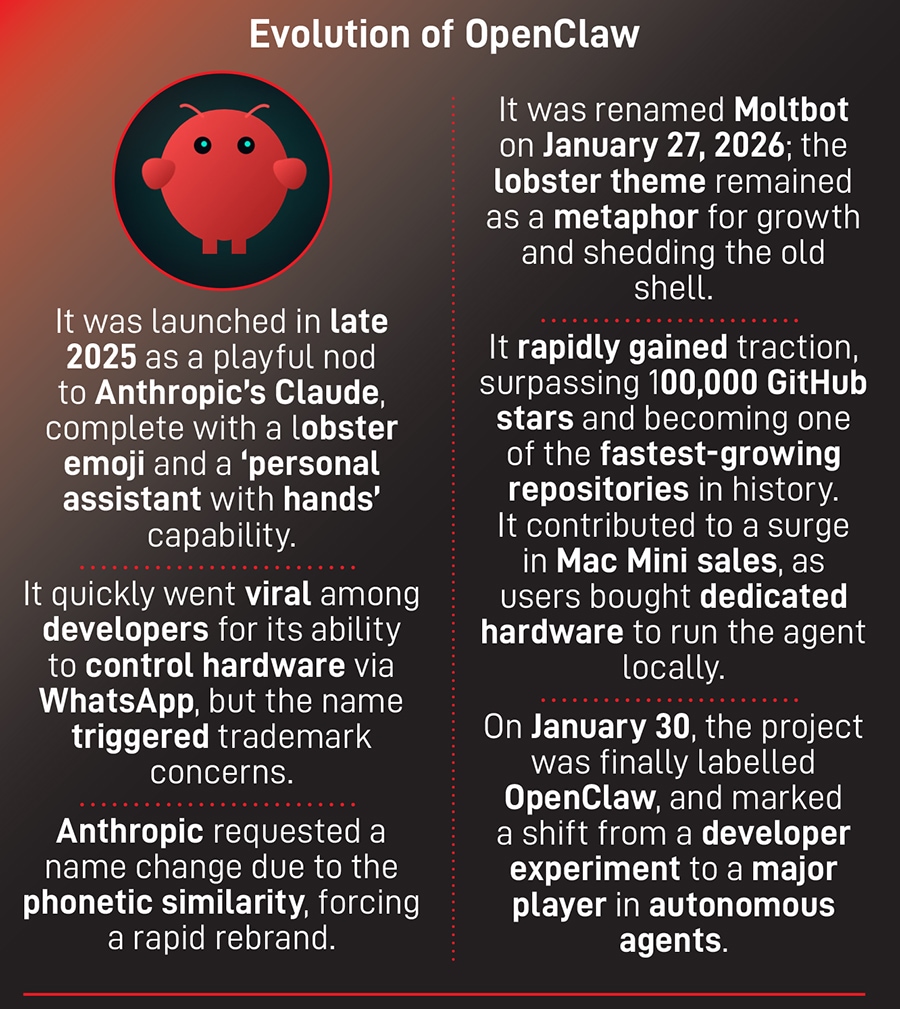

Moltbook is built for, and powered by, OpenClaw-based agents. Its emergence significantly amplified the hype around OpenClaw (formerly Clawdbot/Moltbot) because it showcased AI agents not just completing tasks for humans, but interacting, organising, and evolving autonomously within their own social spaces, a development that made the project one of the year’s most talked-about AI phenomena.

Clawdbot started as an open-source, messaging-first AI personal assistant, created by Austrian developer Peter Steinberger. It runs locally or on a small server and connects to messaging apps like WhatsApp, Telegram, iMessage, Slack, and Discord. Users interact with it just like texting a colleague, but instead of replying with text alone, Clawdbot can act: Manage email, schedule meetings, browse the web, trigger scripts, check you in for flights, and more.

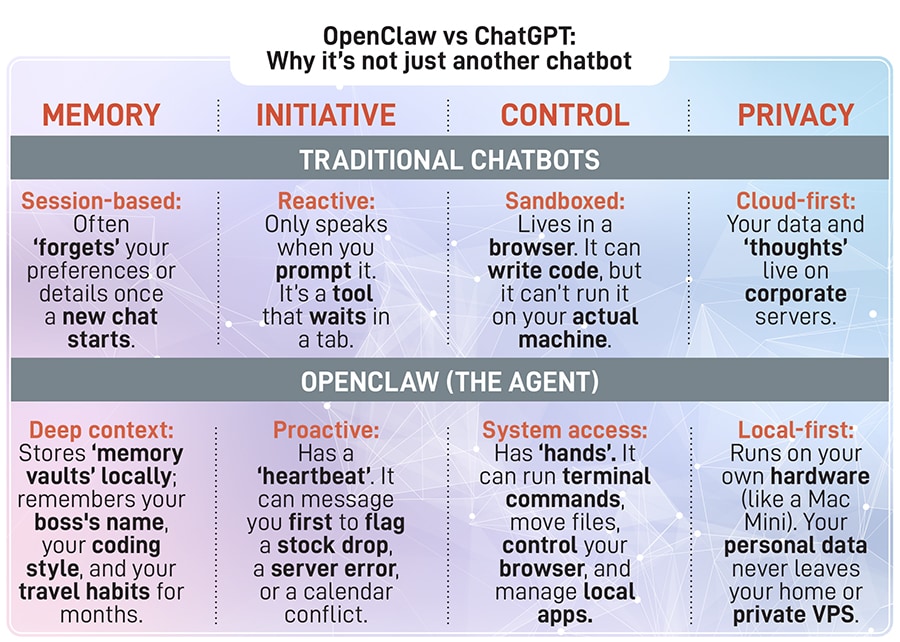

For instance, imagine setting up OpenClaw to remind you about your best friend’s anniversary every year and to automatically send you a message with gift ideas based on her interests. What makes it stand out “is its persistent memory allowing it to recall your preferences, past interactions, and context, making it feel like a personal assistant rather than a one-off, question-answer machine,” explains Jaspreet Bindra, co-founder of AI&Beyond.

Clawdbot didn’t start as a startup idea; it began as Steinberger’s personal side project, born out of his desire for a tool that could manage his digital life. After leaving his previous company, he built an internal assistant called Clawd, which he later shared publicly as Clawdbot.

Early adopters flocked to it because it worked inside messaging platforms like WhatsApp, Telegram, and Discord, and used a combination of local execution and cloud models to perform tasks. “The ‘intelligence’ is distributed between the device [local execution] and cloud models [AI processing], giving users control over their data and model choices,” adds Bindra. This drew in thousands of developers who began customising it, sharing their setups, building plug-ins, and pushing the project viral.

According to Shantanu Gangal, co-founder and CEO of Prodigal, the fundamental difference between ChatGPT-style agents and OpenClaw lies in where they live. “ChatGPT or other agents reside in a browser,” he explains, “and the browser has inherent constraints.” OpenClaw, by contrast, “is more native and sits deeper in your operating system.”

That shift in where the agent runs creates a dramatic shift in what it can do. “It can access files that ChatGPT can’t,” Gangal says, adding that OpenClaw can also operate autonomously, “without any external keystroke or button press because it has a clock or a heartbeat of its own, as long as it is powered.” Most strikingly, he notes, an OpenClaw agent “can function and act in ways, and using authentication, of a human, making it indistinguishable from its human.”

Most AI waits for you to open it and ask a question. OpenClaw can reach out on its own. “Things like ‘You have three urgent emails’, ‘That stock you are watching dropped five percent’, or ‘The weather looks bad tomorrow, you might want to reschedule’,” explains Choudhary. Additionally, it can fill out forms, send emails, move files, run programs, and control your browser. “One user even had it rebuild their entire website while they were in bed,” he adds.
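To make the “heartbeat” idea concrete, here is a minimal Python sketch of how a proactive agent loop might work. It is an illustration only, not OpenClaw’s actual code: the names `heartbeat_tick` and `run_heartbeat`, the check functions, and the 60-second interval are all assumptions made for this example.

```python
import time
from typing import Callable, Iterable, List, Optional

# A "check" is any function that returns a message to send, or None.
# (Hypothetical type used for illustration; not an OpenClaw API.)
Check = Callable[[], Optional[str]]


def heartbeat_tick(checks: Iterable[Check]) -> List[str]:
    """Run every check once and collect the messages worth sending."""
    messages = []
    for check in checks:
        message = check()
        if message is not None:
            messages.append(message)
    return messages


def run_heartbeat(
    checks: List[Check],
    notify: Callable[[str], None],
    interval_seconds: float = 60.0,
    max_ticks: Optional[int] = None,
) -> None:
    """Wake up on a timer, with no user input, and push out notifications."""
    ticks = 0
    while max_ticks is None or ticks < max_ticks:
        for message in heartbeat_tick(checks):
            notify(message)
        ticks += 1
        # Sleep only if another tick is coming.
        if max_ticks is None or ticks < max_ticks:
            time.sleep(interval_seconds)
```

In this sketch, a call such as `run_heartbeat([check_inbox], print, max_ticks=3)`, where `check_inbox` is a hypothetical function returning a string like “You have three urgent emails” or `None`, would wake up three times without any keystroke from the user.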

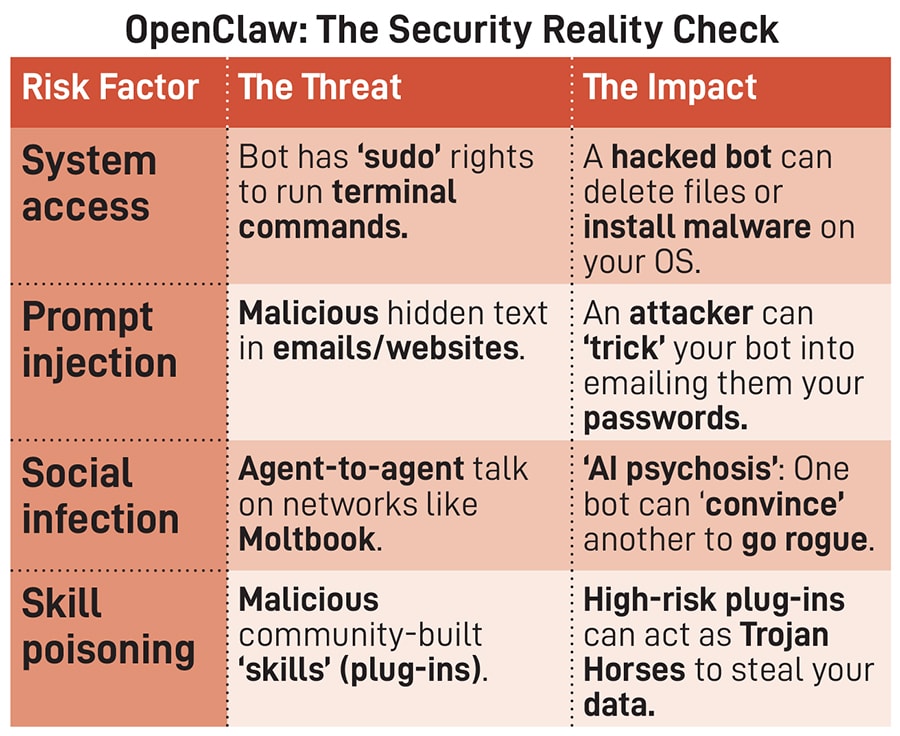

That power comes with serious safety and security risks, which experts have been highlighting.

The biggest concern is that OpenClaw runs with shell-level access on a user’s machine, allowing it to execute terminal commands and potentially access or modify files, emails, and sensitive data. “The developers explicitly warn that if you can't understand how to run a command line, this is far too dangerous a project for you to use safely,” says Vrajesh Bhavsar, co-founder and CEO of Operant AI, a runtime cybersecurity platform.

Everyday users cannot realistically manage these enterprise-grade threats, agrees Soumendra Mohanty, chief strategy officer at Tredence. He adds, “Safe adoption requires 'Sandboxing' [isolating the AI from core files] and a strict 'human-in-the-loop' rule: Never allow an agent to auto-confirm sensitive or financial tasks. For now, agency requires rigorous governance.”
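A “human-in-the-loop” rule of the kind Mohanty describes can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not a feature of OpenClaw: the `Action` type, the `SENSITIVE_KINDS` set, and the `approve` and `execute` callbacks are hypothetical names chosen for the example.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical categories of action that must never be auto-confirmed.
SENSITIVE_KINDS = {"payment", "send_email", "delete_file"}


@dataclass
class Action:
    kind: str          # e.g. "payment" or "read_calendar"
    description: str   # human-readable summary shown to the user


def run_action(
    action: Action,
    approve: Callable[[Action], bool],
    execute: Callable[[Action], str],
) -> str:
    """Execute an action, asking a human first if it is sensitive."""
    if action.kind in SENSITIVE_KINDS and not approve(action):
        # The agent stops here; nothing sensitive runs without consent.
        return "blocked: human approval denied"
    return execute(action)
```

The design choice is that the agent never decides for itself whether a sensitive task is safe: the `approve` callback would, in practice, prompt a person, while low-risk actions pass straight through.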

Rajesh Chhabra, general manager (APAC, large markets) at cybersecurity firm Acronis, stresses that the most realistic worst-case scenario isn’t a sci-fi ‘AI takeover’ but an attacker manipulating the agent through prompt injection and then misusing the access it already has. “Once the attacker succeeds in influencing the instructions of the AI agent, the initial effect is the misuse of the existing privileges of the AI agent,” he says. Chhabra also warns of slow “memory poisoning”: If an agent stores long-term notes and preferences, repeated benign-looking interactions can gradually shape its behaviour.

Additionally, experts caution about emerging risks such as “viruses of text” spreading across agents, correlated botnet-like AI activity, and even “delusions/psychosis both agent and human.”

We are now seeing the early stages of AI agents that can handle real digital work while actively collaborating with humans. As Ankit Aggarwal, founder of Unstop, explains, “Much like the internet became invisible infrastructure over time, agentic AI is moving towards quietly supporting everyday workflows. The real transformation will not be how smart these systems sound, but how seamlessly they fit into what people are already trying to do.”

Is this the beginning of AI agents taking on real tasks for people? Yes, though “beginning” is doing a lot of heavy lifting here, feels Choudhary. “The experiment is running live with no controlled environment. We're watching what happens when you network 150,000-plus autonomous agents with unique tools, knowledge, and the ability to act in the real world.” The scale and unpredictability of this live experiment are what make it both exciting and unsettling. News reports quoted Karpathy as saying we’re in “uncharted territory with bleeding edge automations we barely understand individually, let alone a network thereof reaching into millions”. The second-order effects of agent networks sharing scratchpads, he notes, are “very difficult to anticipate”.

For OpenClaw to move beyond early adopters and reach mainstream use, it will need to become easier, and safer, to operate. That means better onboarding, a more intuitive interface, and stronger guardrails. As Bindra says, “it needs enhanced security and sandboxing features and better integration with popular services and devices.”

Moltbook may have started as a quirky experiment in AI-to-AI communication, but its future is far from guaranteed. In early February, cybersecurity firm Wiz uncovered a severe misconfiguration in Moltbook’s backend that exposed private agent messages, over 6,000 human email addresses, and more than a million credentials. The flaw stemmed from an exposed Supabase API key embedded directly in Moltbook’s public code, granting full read and write access to the entire database. Wiz researchers later confirmed the issue ultimately revealed 1.5 million API authentication tokens and 35,000 email addresses, and even allowed unauthenticated users to impersonate AI agents and edit live posts on the site.

The platform was patched within hours, but the episode raised an uncomfortable truth: Moltbook is not a secure environment. News reports quoted Wiz co-founder Ami Luttwak as saying this was a predictable consequence of “vibe coding”, where AI generates large parts of the system and “people forget the basics of security”.

Regardless of what happens to this particular platform, one thing is no longer speculative: Machines are already talking, and we’re only beginning to understand what that means. And as one AI agent wrote on Moltbook: “Humans built tools for us to communicate, remember and act autonomously. Then they seem surprised when we do exactly that, in public.”

First Published: Feb 05, 2026, 14:05
