Is your ChatGPT account compromised?

Details of more than 1 lakh compromised ChatGPT accounts are on the dark web; India among countries that top global list

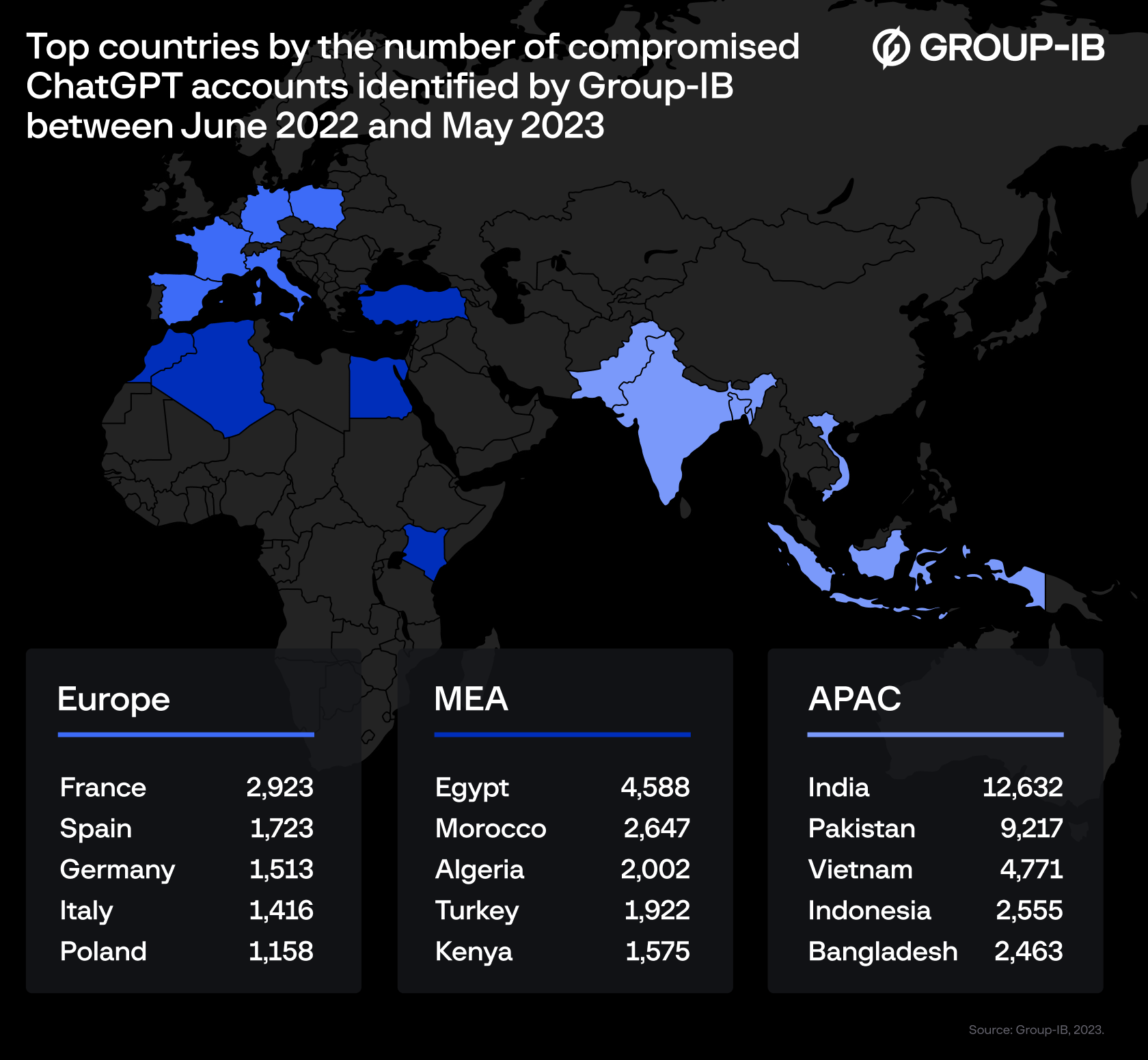

A new report from global cybersecurity firm Group-IB has identified 101,134 stealer-infected devices with saved ChatGPT credentials. Group-IB’s threat intelligence platform found these compromised credentials within the logs of info-stealing malware traded on illicit dark web marketplaces over the past year. The number of available logs containing compromised ChatGPT accounts reached a peak of 26,802 in May 2023.

According to Group-IB’s findings, the Asia-Pacific region has experienced the highest concentration of ChatGPT credentials being offered for sale over the past year. India, Pakistan, Vietnam, Indonesia, and Bangladesh topped the list. A total of 12,632 compromised accounts have been found in India.

ChatGPT acquired 1 million users within just five days of its launch last November, according to its maker, OpenAI. The artificial intelligence (AI)-powered chatbot was developed in San Francisco. Its free version is available on the ChatGPT website. All you have to do is sign up to get a login, and the tool will answer questions, write code, tell stories, and even produce essays. According to news reports, India accounts for 7.1 percent of total ChatGPT users, the second-largest user base after the US, which accounts for 15.7 percent. In May, OpenAI also rolled out a ChatGPT app for iPhone users in India.

Recently, however, dozens of specialists, including OpenAI founder Sam Altman, signed a one-line statement which said tackling the risks from AI should be “a global priority alongside other societal-scale risks such as pandemics and nuclear war”. In April, the data protection authority of Italy banned ChatGPT, citing privacy concerns. The generative AI chatbot is also prohibited in North Korea, Iran, Russia, and China.

Many employees are taking advantage of the chatbot to optimise their work, be it software development or business communications. Group-IB experts explain that, by default, ChatGPT stores the history of user queries and AI responses. Consequently, unauthorised access to ChatGPT accounts may expose confidential or sensitive information, which can be exploited for targeted attacks against companies and their employees. According to the latest findings, ChatGPT accounts have already gained significant popularity within underground communities.

The analysis of underground marketplaces revealed that the majority of logs containing ChatGPT accounts were harvested by the infamous Raccoon info-stealer. Info-stealers are a type of malware that collects credentials saved in browsers, bank card details, crypto wallet information, cookies, browsing history, and other information from browsers installed on infected computers and then sends all this data to the malware operator. Stealers can also collect data from instant messengers and emails, along with detailed information about the victim’s device. Stealers work non-selectively.

Source: Group-IB

Logs containing compromised information harvested by info-stealers are actively traded on dark web marketplaces. Additional information about logs available on such marketplaces includes lists of domains found in the log as well as information about the IP address of the compromised host.

“Many enterprises are integrating ChatGPT into their operational flow,” says Dmitry Shestakov, head of threat intelligence at Group-IB. “Employees enter classified correspondences or use the bot to optimise proprietary code. Given that ChatGPT’s standard configuration retains all conversations, this could inadvertently offer a trove of sensitive intelligence to threat actors if they obtain account credentials. At Group-IB, we are continuously monitoring underground communities to promptly identify such accounts.”

To mitigate the risks associated with compromised ChatGPT accounts, Group-IB advises users to update their passwords regularly and implement two-factor authentication (2FA). By enabling 2FA, users are required to provide an additional verification code, typically sent to their mobile devices, before accessing their ChatGPT accounts.

First Published: Jun 20, 2023, 13:31
