AI: The ghost in the stock market machines

As India's capital markets become more dependent on AI and algorithms, insiders call for regulations to mitigate risks

In April, videos of Ashishkumar Chauhan, MD and CEO of the National Stock Exchange (NSE), recommending stocks went viral on social media. Carrying the NSE logo, the videos looked genuine enough to sway gullible investors with stock-trading tips. In reality, they were deepfakes that used sophisticated technology to replicate Chauhan’s voice and face. (NSE employees are not authorised to recommend stocks or deal in them.)

In July, the Bombay High Court directed social media platforms to remove all accounts infringing the NSE trademark and to take action against the fake videos.

Chauhan’s fake video was not a stray case of artificial intelligence (AI) or deepfakes being used to trap investors. In January, fake videos of Nimesh Shah, MD of ICICI Prudential, promoting stocks surfaced in Facebook ads. Deepfakes use deep learning techniques to manipulate images, audio and video, and are used for impersonation, misinformation, misrepresentation and profiteering.

Several AI-generated impersonation videos doing the rounds show popular, reputable figures recommending stocks. Fraudsters also make extensive use of apps that mimic well-known companies to dupe investors looking for quick, high returns.

In September, the Directorate of Enforcement (ED) released details of cyber investment scams amounting to ₹25 crore. A businessman in Noida, for instance, was cheated of ₹9.09 crore by scamsters who had added him to a WhatsApp group named GFSL Securities Official Stock C 80. A doctor in Bathinda, Punjab, was defrauded of ₹5.93 crore after he downloaded a fake GFSL Securities app he came across on Facebook and transferred the money to invest in the stock market. A woman in Faridabad, Haryana, was cheated of ₹7.59 crore after scamsters duped her into investing in stocks through fake apps.

“A similar modus operandi has been adopted by fraudsters in various other FIRs to cheat innocent persons by luring them to transfer their hard-earned money on the pretext of investments in high-return yielding financial products through fraudulent apps,” the ED said in a press statement.

AI could reduce both the cost of financial advice and the need for human advisors, making advice more accessible and streamlining customer interactions through automated responses. Its adoption in Indian capital markets has significantly changed how trading and investment decisions are made. However, it has also ushered in a new era of deception and fraud.

“AI’s potential for misuse has come to light, particularly in stock markets, where it can enable large-scale impersonation at low cost. This poses a significant threat globally, making it crucial to implement regulations such as watermarking AI-generated content to prevent misuse,” says Parminder Varma, whole-time director and chief business officer, Sharekhan by BNP Paribas.

Hari Nair, deputy CTO, m.Stock by Mirae Asset Capital Markets, disagrees that AI has become a menace for stock markets. He sees it as a transformative enabler in the broking industry, with its primary use cases centred on enhancing processes such as KYC (Know Your Customer), digital marketing, customer profiling and customer engagement. However, Nair acknowledges that impersonation remains a significant challenge. “Currently, AI’s contextual understanding of the Indian broking industry is limited, which means there are still risks associated with not addressing all potential use cases effectively,” he says.

ED investigations conducted under the Prevention of Money Laundering Act (PMLA) reveal a common pattern. First, victims are lured on social media with false promises of high returns, allotment of IPO shares through special quotas, and the like. Once they express interest, scamsters add them to WhatsApp or Telegram groups, named to resemble well-known apps and financial institutions and populated by fake members who share fabricated success stories. Once the victims are convinced these stories are credible, they are asked to install apps and invest in fake IPOs and stocks by transferring money to the bank accounts of shell companies. To build trust, victims may initially be shown good returns, which encourages them to invest larger amounts.

According to the ED, the Golden Triangle, where Thailand, Laos and Myanmar meet, is being exploited by cyberfraud operations, including cyber investment scams. Criminal networks there force victims to work in call centres or take part in online scams under harsh, coercive conditions.

Can AI lead to a financial crisis? It is a real possibility. US Securities and Exchange Commission (SEC) Chair Gary Gensler told the Financial Times in October 2023 that, without swift intervention, it was “nearly unavoidable” that AI would trigger a financial crisis within a decade.

The threats AI poses to stock markets go well beyond deepfake videos and investment scams. Fast-paced adoption of AI without regulations and checks can create new tail risks for financial institutions and amplify existing ones.

“With decisions being made by algorithms, the possibility of black-swan events causing drastic market falls has increased, primarily since decisions can be taken and executed in seconds, giving little time for authorities and regulators to react,” says Shravan Shetty, managing director, Primus Partners India. This particularly affects retail investors, who lack access to tools that would let them react as quickly.

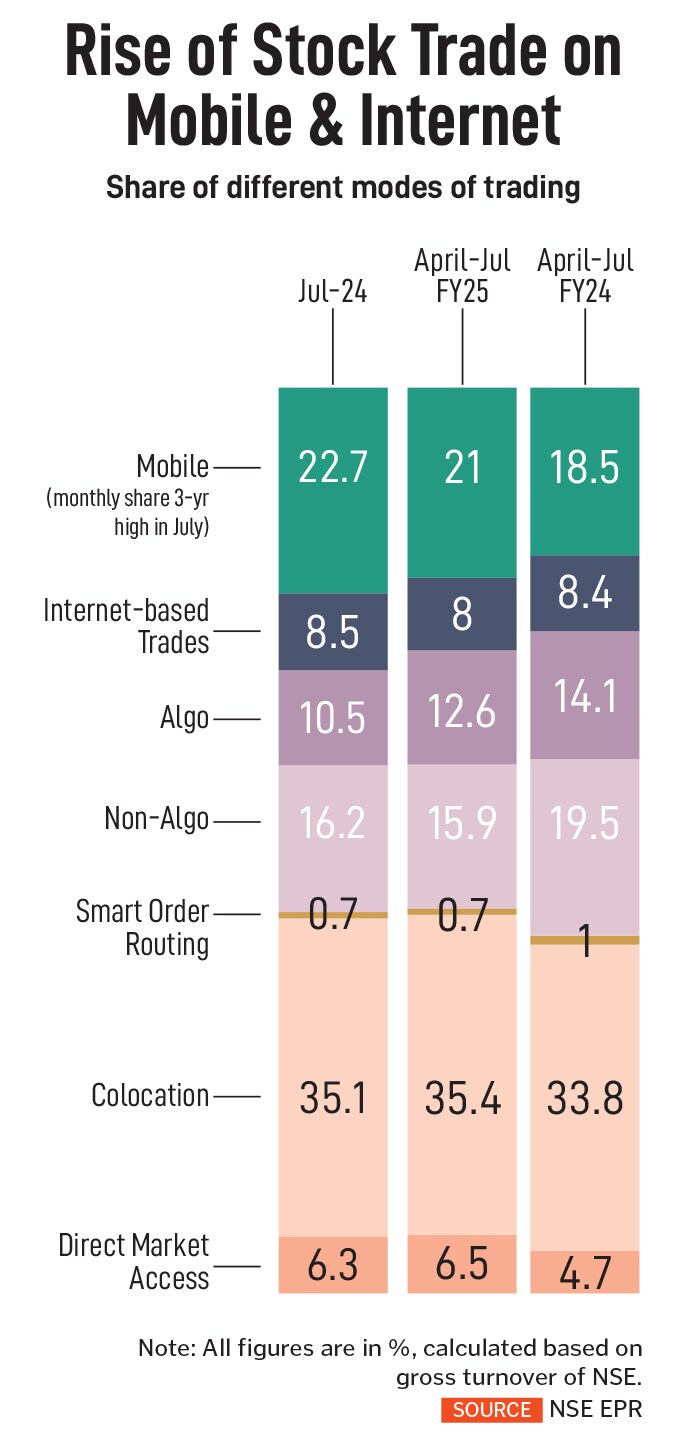

AI-driven trading algorithms can also exacerbate market volatility. High-frequency trading (HFT), a form of algorithmic trading, is gaining popularity in India as futures and options (F&O) trading volumes have spiked over the last few years. “These algorithms often react to market data and news at speeds much faster than human traders, leading to rapid price swings. This behaviour can be particularly pronounced during IPOs, where initial trading can be heavily influenced by automated trading strategies that amplify price movements based on limited data inputs,” says Supratim Chakraborty, partner, Khaitan & Co.

When many investors follow similar AI-generated signals, bubbles or crashes can form on the back of algorithmic trends rather than fundamentals. Algorithms reacting to market data in real time can trigger rapid buying and selling, which may amplify price movements. “Implementing circuit breakers or cooling-off periods can help mitigate this issue by providing time for human intervention and assessment,” says Amit Rahane, partner, forensic and integrity services, EY India.
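The idea behind Rahane’s suggestion can be illustrated with a toy model. The Python sketch below is a minimal, hypothetical circuit breaker: it halts trading for a fixed cooling-off window whenever the price moves beyond set percentages of a reference level, giving humans time to step in. The class name, the 10%, 15% and 20% trigger levels and the 15-minute halt are illustrative assumptions for this example only; real exchange rules are considerably more detailed.

```python
from dataclasses import dataclass, field


@dataclass
class CircuitBreaker:
    """Toy circuit breaker; all thresholds and durations are assumptions."""
    reference_price: float  # e.g. the previous day's closing level of an index
    thresholds: tuple[float, ...] = (0.10, 0.15, 0.20)  # hypothetical trigger moves
    cooling_off_seconds: float = 900.0  # hypothetical 15-minute halt
    halted_until: float = 0.0
    tripped: set[float] = field(default_factory=set)

    def on_price(self, price: float, now: float) -> bool:
        """Return True if trading may continue at time `now`, False if halted."""
        # During a cooling-off window, every order is rejected.
        if now < self.halted_until:
            return False
        # Fractional move (up or down) from the reference level.
        move = abs(price - self.reference_price) / self.reference_price
        # Each level trips only once; a gap through several levels trips them all.
        crossed = [lvl for lvl in self.thresholds
                   if move >= lvl and lvl not in self.tripped]
        if crossed:
            self.tripped.update(crossed)
            self.halted_until = now + self.cooling_off_seconds
            return False
        return True


if __name__ == "__main__":
    cb = CircuitBreaker(reference_price=100.0)
    print(cb.on_price(95.0, now=0))     # 5% move: trading continues
    print(cb.on_price(89.0, now=10))    # 11% fall trips the 10% level: halt
    print(cb.on_price(90.0, now=500))   # still inside the cooling-off window
    print(cb.on_price(91.0, now=1000))  # halt over, 9% move: trading resumes
```

The design choice here is deliberately blunt: the breaker ignores who is trading or why, and simply buys time once moves become extreme, which is the “time for human intervention” that the mechanism is meant to provide.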

An increasing reliance on AI systems raises several risks, along with ethical issues, says Rahane. AI models can make erratic decisions, and it can be hard to assess whether they prioritise the interests of the brokers or companies that build them over those of clients. Systemic risks could also emerge from the financial sector’s reliance on a limited number of core models. “This could lead to a ‘monoculture’ scenario where numerous market players depend on identical datasets, amplifying the risks of widespread impact if those models fail or behave unexpectedly,” Rahane explains.

Chakraborty says the key concerns around increased AI adoption are data privacy, security and market manipulation. AI systems can also inadvertently perpetuate biases present in their training data, leading to discriminatory practices in trading and related decisions. “This raises ethical concerns,” he says.

The Indian stock market regulator, the Securities and Exchange Board of India (Sebi), has stepped up its own adoption of AI-backed technologies while tightening norms so that over-dependence on AI does not expose capital markets to severe threats. Sebi currently uses AI for applications such as fraud detection, risk assessment and customer service, and plans to speed up IPO approvals using AI. Sebi’s Virtual Assistant (Seva), an AI-based conversational platform for investors, provides general information about the securities market, the latest master circulars and the grievance redressal process.

While acknowledging that AI tools have proven useful for summarising and analysing data and for boosting efficiency, Sebi has proposed more disclosures by investment advisors (IAs) and research analysts (RAs). In August, it proposed that IAs and RAs who use AI tools to service clients must fully disclose to those clients the extent to which such tools are used. Sebi has also stressed that the “responsibility of data security, compliance with regulatory provisions governing investment advisory services/research services lies solely with the IA/RA, irrespective of the scale and scenario of IA/RA using AI tools”.

Rahane feels there is still room for improvement. “Introducing stringent testing and validation of AI models, enforcing transparency and explainability in AI-driven decisions, mandating stress tests, and developing comprehensive risk management frameworks are some areas that can be closely looked at,” he says.

Advanced markets such as the US, UK and Singapore have adopted sophisticated AI tools in capital markets more widely, and their regulatory frameworks are also more mature.

Adoption of AI in Indian stock markets has been relatively slow, says Arindam Ghosh, co-founder, Alphaniti Investment Advisors. “In India, we have also seen the introduction of a series of consultative papers, policy changes and significant reforms being implemented with the promise of more to come,” he says.

Global quantitative hedge funds already use AI for investment decisions, and Nasdaq recently launched the world’s first AI-powered order type approved by the SEC. “In India, AI is primarily employed in areas outside of core trading activities. In contrast, other markets have integrated AI directly into trading processes, such as using dynamic stock recommendations powered by AI,” says Mirae’s Nair.

Nair does not expect technology trends to hurt the market; rather, he believes AI will complement existing systems. He does, however, expect regulations aimed at preventing AI from destabilising the stock market. “We anticipate increased engagement with AI in the algorithmic trading space, which is already based on automated patterns. This represents a natural progression for AI usage. Risks will be identified and mitigated as part of a continuous process,” he says.

First Published: Sep 27, 2024, 12:15