Building future workplaces: Elevating human potential with AI as a trusted ally

The adoption of AI in workplaces promises to enhance productivity and empower employees, but addressing concerns around data security and ethical use is essential to building trust between humans and AI.

Rahul Sharma, AVP - Platform, Service Cloud, Heroku and Slack

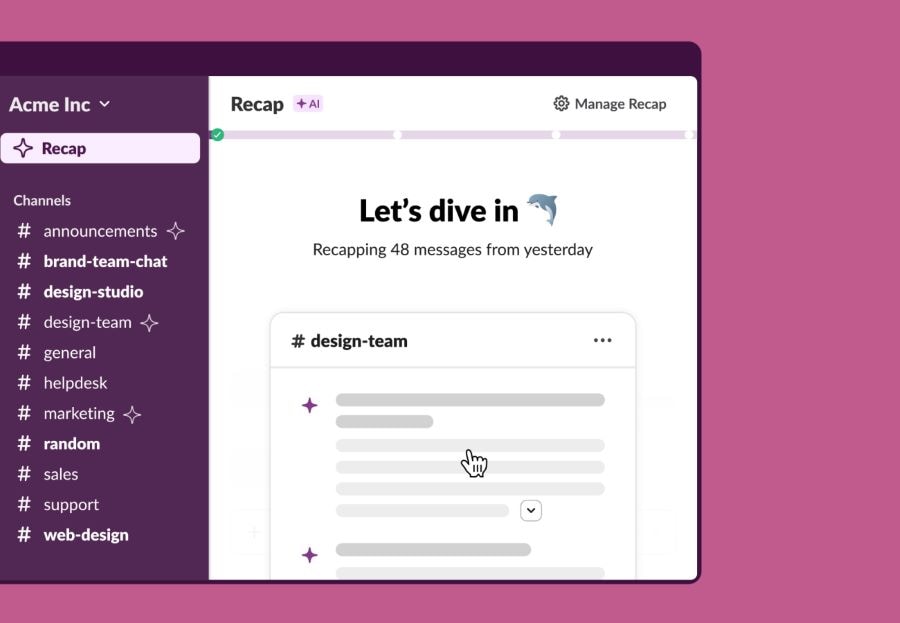

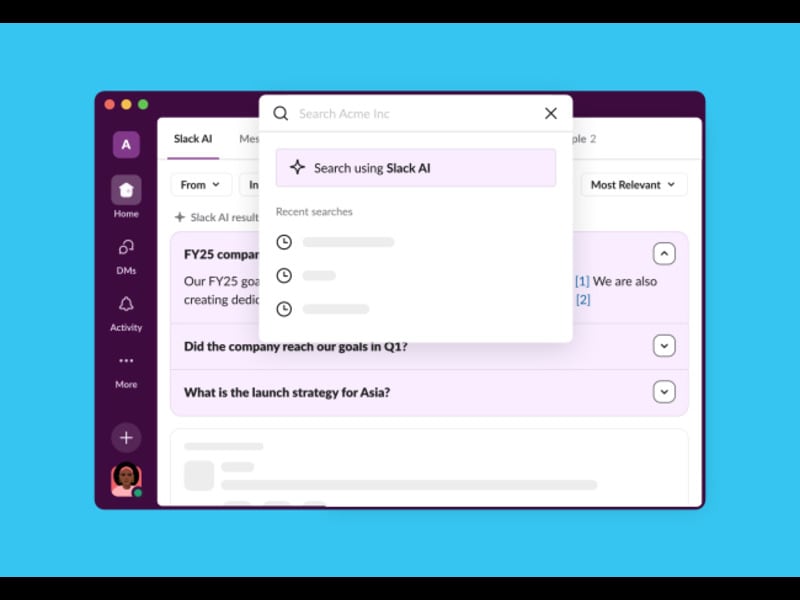

Artificial Intelligence (AI) is moving beyond its traditional boundaries in 2024, evolving from a concept into a transformative force in workplaces around the world. AI tools that act as virtual assistants, answering questions, generating content and automating actions, have ignited a wave of excitement and promise to redefine what everyday work looks like.

A recent Indeed report highlights that companies in India foresee a bright future with the adoption of AI: more than 7 in 10 respondents say they expect the technology to empower both business leaders and themselves personally. Slack’s Workforce Index research revealed that 94% of executives consider incorporating AI into their company an urgent priority. The use of AI tools in the workplace is also rising rapidly, with one in four desk workers globally saying they have tried AI tools for their work.

Along with the excitement, however, we must acknowledge and address the trust concerns that exist. There are legitimate worries about the security of data once it is fed into AI tools, not to mention the accuracy and usefulness of what comes back when a tool hallucinates. History has taught us valuable lessons about the dangers of embracing technological advancement at all costs, which has often left people behind or exposed them to unforeseen risks. As AI continues to evolve and become more sophisticated, we must take a proactive stance to harness its power while mitigating those risks. The solution lies in placing humans at the helm of AI, ensuring that our judgement and values remain the guiding force.

While it is unrealistic for people to engage in every AI interaction or review every AI-generated output, we can design robust, system-wide controls that let AI handle routine work while humans focus on the high-judgement tasks that require their oversight.

Imagine a scenario where AI systems review and summarise millions of customer profiles, unlocking unprecedented insights and streamlining processes. Simultaneously, humans are empowered to lean in and apply their judgement in ways that AI cannot, building trust and ensuring that decisions are aligned with ethical principles.