In India, 81.9 percent of content actioned on Facebook is spam

Facebook's interim transparency report for India, released after those of Google and Koo, states that country-level granular data is less reliable and is a 'directional best estimate'

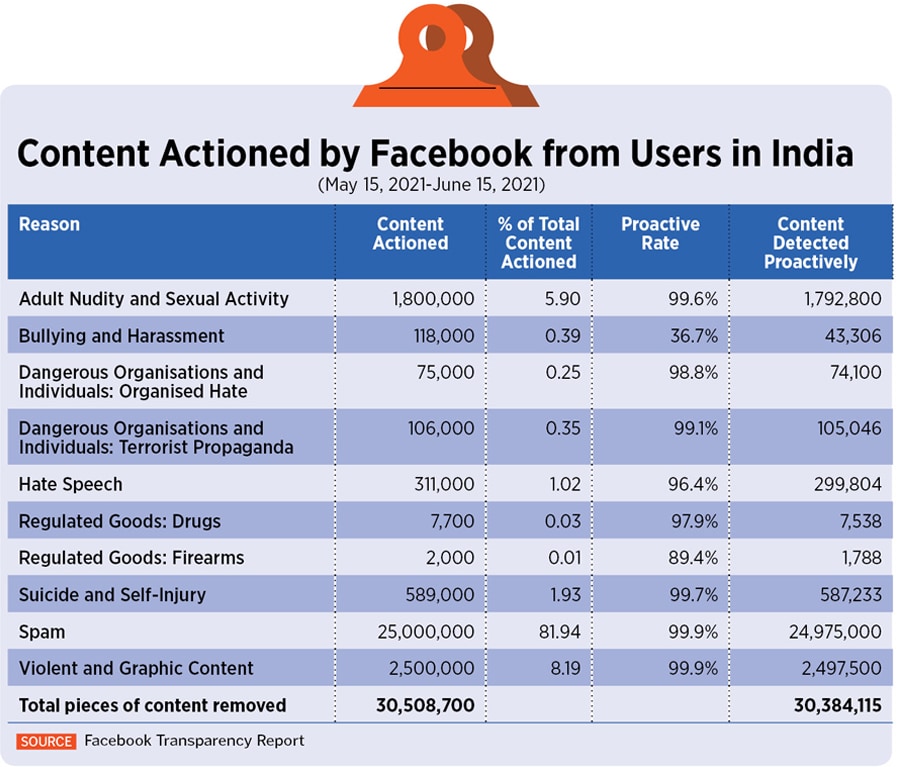

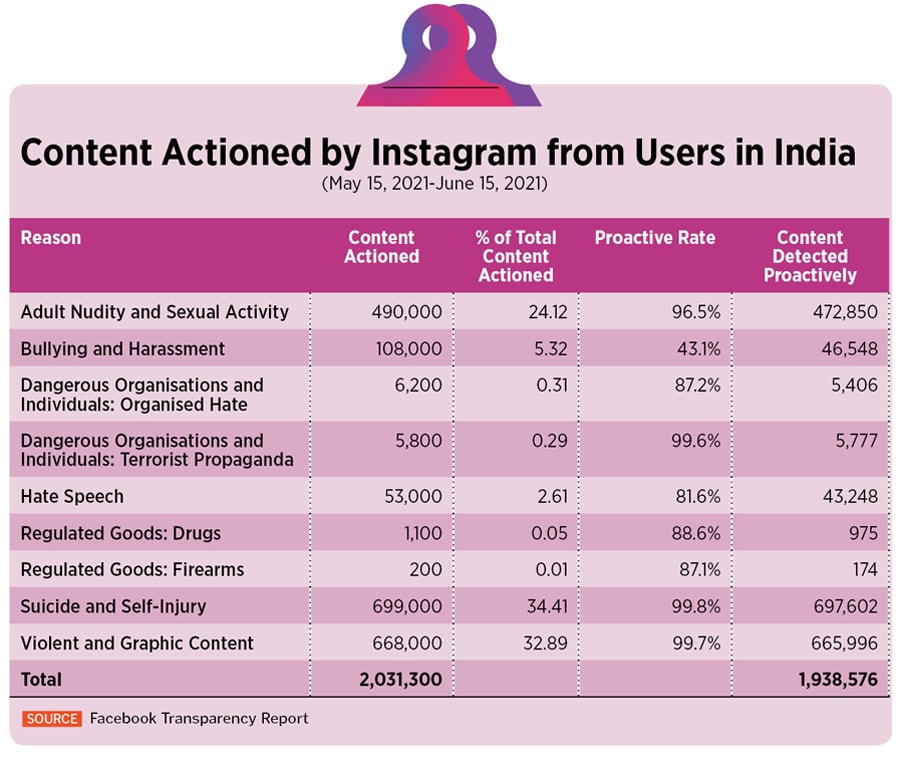

Of the 30.5 million pieces of content by users in India that Facebook actioned between May 15 and June 15, 81.9 percent was spam. This was followed by violent and graphic content, which accounted for 8.2 percent of all content actioned. On Instagram, on the other hand, of the 2.03 million pieces of content that were removed, 34.4 percent were actioned for showing suicide and self-injury, followed by 32.9 percent of posts that were actioned for showing violence and graphic content.

Actions on content (which includes posts, photos, videos or comments) include content removals, or covering disturbing videos and photos with a warning, or even disabling accounts. Problematically, Facebook has not given the total number of pieces of content reported by users, or detected by algorithms, thereby making it harder to assess the scale at which problematic content is flagged (if not actioned) on Facebook.

Facebook released its interim report for the period from May 15 to June 15, 2021 at 7 pm on July 2, about five weeks after the deadline for compliance with the Intermediary Rules lapsed. The final report, with data from WhatsApp, will be released on July 15. Apart from Google, Facebook and Koo, no other social media company, including Indian entities such as MyGov and ShareChat, has published its transparency report.

Facebook actions content along 10 categories on its main platform, while on Instagram it actions along nine categories. The only point of difference is spam. As per the report, Facebook is working on “new methods” to measure and report spam on Instagram.

Does Facebook fare better than Instagram at automatically detecting problematic content?

In the report, the social media behemoth has also given a “proactive rate”, that is, the percentage of reported content that Facebook had itself flagged using machine learning before users reported it. Facebook’s proactive rate was more than 99 percent across most of the metrics except bullying and harassment (36.7 percent), organised hate by dangerous organisations and individuals (98.8 percent), hate speech (96.4 percent), and content showing regulated goods such as drugs (97.9 percent) and firearms (89.4 percent).

Instagram did not perform as well as Facebook overall, but it was a bit better at detecting bullying and harassment (43.1 percent), at least in percentage terms. But in absolute numbers, Facebook had automatically detected 43,306 pieces of content while Instagram had automatically detected 46,548 pieces of content. Overall, Facebook actioned about 15 times the content that Instagram did, and the gap in automated detection is similar: Facebook detected 30.38 million pieces of content automatically compared to Instagram’s 1.94 million, a factor of more than 15.

The reason might be simple: The same machine learning algorithms power both Facebook and Instagram’s automatic detection.
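The factor of roughly 15 cited above can be checked with a few lines of arithmetic. The snippet below is only an illustrative sketch: it uses the rounded figures quoted in this article rather than the underlying report data, and the variable and function names are made up for the example.

```python
# Rounded figures quoted in this article, in millions of pieces of content.
# The names below are illustrative, not taken from Facebook's report.
facebook_actioned = 30.5
instagram_actioned = 2.03
facebook_auto_detected = 30.38
instagram_auto_detected = 1.94


def ratio(numerator: float, denominator: float) -> float:
    """Return how many times larger the numerator is than the denominator."""
    if denominator <= 0:
        raise ValueError("denominator must be positive")
    return numerator / denominator


actioned_ratio = ratio(facebook_actioned, instagram_actioned)
auto_detected_ratio = ratio(facebook_auto_detected, instagram_auto_detected)

print(f"Actioned content, Facebook vs Instagram: {actioned_ratio:.1f}x")
print(f"Automatically detected, Facebook vs Instagram: {auto_detected_ratio:.1f}x")
```

Because the inputs are rounded, the two ratios are approximate; they land at about 15 and a little under 16 respectively.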

Koo has given minimal data in its report

Koo’s transparency report, on the other hand, is for the month of June and gives bare minimum information compared to Facebook and Google. Of the 5,502 Koos that were reported by users, Koo removed 1,253 (22.8 percent) of them. Similarly, of the 54,235 Koos that were detected through automated tools, 1,996 (3.7 percent) were actioned.

Problematically, Koo has counted ignoring a flagged piece of content as one of the actions it has taken, thereby making it unclear how effective human reporting and automated content moderation actually are.
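The percentages quoted for Koo follow directly from the counts cited above. The short sketch below reproduces them; the function name `action_rate` is hypothetical, and the calculation says nothing about what kind of action Koo took in each case.

```python
def action_rate(actioned: int, flagged: int) -> float:
    """Return the share of flagged items that were actioned, as a percentage."""
    if flagged <= 0:
        raise ValueError("flagged must be positive")
    return 100 * actioned / flagged


# Counts as quoted in this article from Koo's report for June.
user_reported_rate = action_rate(actioned=1_253, flagged=5_502)
auto_detected_rate = action_rate(actioned=1_996, flagged=54_235)

print(f"Reported by users: {user_reported_rate:.1f} percent")
print(f"Detected by automated tools: {auto_detected_rate:.1f} percent")
```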

Comparing transparency reports is impossible

Two days ago, Google too released its transparency report for the month of April. In it, it gave the details of complaints received via its web forms. It did not give details of content that was automatically taken down. Koo released its report for the month of June yesterday.

Both Google and Facebook have said that there will be a lag in publishing transparency reports—two months for Google and 30-45 days for Facebook—to allow for data collection and validation. For Google, the reporting process for the transparency report will continue to evolve, as per a statement by a Google spokesperson.

Earlier, Forbes India had pointed out that the contours of the transparency reports have not been laid down. The outcome of that is visible in Google and Facebook’s reports: it is impossible to compare them. The metrics that have been considered are completely different across the two companies. While Facebook has focussed on reasons such as hate speech, nudity and spam in its report, Google has focussed largely on legal reasons such as copyright or trademark infringement, defamation, counterfeit, etc.

Also, the reporting cycle is different: Google has reported for a calendar month while Facebook went with a mid-month cycle. This also means that neither of the two reports can be compared with the global reports that the two companies release, as Google’s is released twice a year and Facebook releases its report every quarter. Neither company gives global monthly data.

In the case of Google, apart from YouTube, it is not even known which products or platforms Google has treated as significant social media intermediaries in its data. The Google spokesperson did not answer this question from Forbes India.

Another problem that Forbes India had highlighted earlier is how a social media company ascertains whether the data relates to Indian users: is the content in question restricted to content about Indian users, is it content posted by users in India, or is it content reported by users in India irrespective of its origin?

In the report, Facebook has pointed out that country-level data may be less reliable since the violations are “highly adversarial”, wherein bad actors may often mask their countries of origin to avoid detection by Facebook’s systems. Previously, the platform has also maintained that the volume of content that is actioned can be misleading. For instance, if a spammer shares 10 million posts with the same malicious URL, the content actioned number would show an enormous spike.

First Published: Jul 02, 2021, 21:26
